Tools for the Toolbelt

I appreciate tools.

3D Printing

I recently got a 3D printer (a Bambu Lab A1 Mini, specifically). Man, what an awesome tool to add to the toolbox, especially for someone who makes puzzle boxes. 3D printing has turned out to be much easier and cheaper than I ever would have guessed. I expected the learning and tinkering curve to be a much steeper climb.

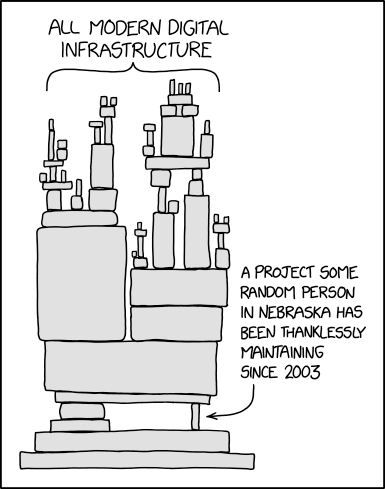

There’s this loop:

When you get into 3D printing, you start walking that loop over and over, and it never stops being satisfying.

Large Language Models

Until now, I’ve held generative AI chatbots like ChatGPT, Google’s Gemini, and similar products at arm’s length. It’s not that I think they aren’t useful. It’s not that I’m scared to give them information. It’s not that I’m scared they will take my data and take my job.

The reason I’ve been hesitant to make LLMs a go-to tool for this or that is that they could go away at any time. They are services run by a few large technology companies and offered to us users for free. Those companies are under no obligation to keep offering free access, and as of yet they are all operating these services at a loss. Nobody has figured out a business model for turning my prompts¹ into more revenue than it costs to run the GPUs that serve me responses. I don’t want to become dependent on a tool that’s so obviously subject to being taken away, either by Google and OpenAI deciding to shut their doors or by them deciding their AI chatbots are worth $200/month and not a penny less.

This comic doesn’t really apply here, but I love xkcd, so I’ll leave it anyway.

How I Learned to Stop Worrying and Love the AI

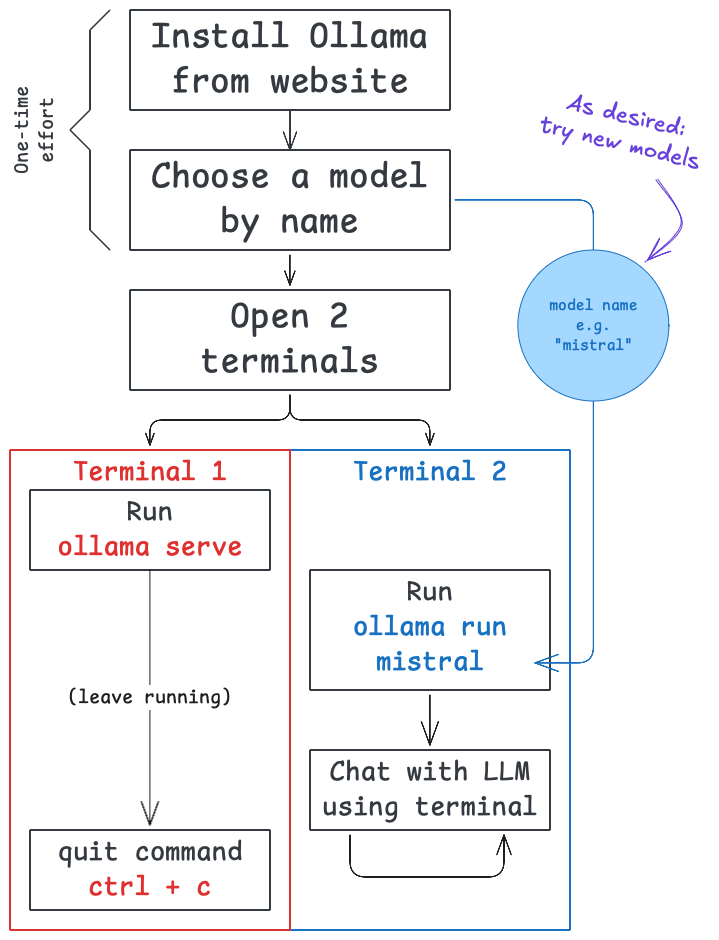

My fear of incorporating LLMs into my problem-solving toolset has gone away. I learned that it’s free and easy to self-host fairly capable local large language models (LLLMs, if you will). The tool Ollama makes it fast and easy to download local models, run them, and interact with them. You have access to dozens of models, many of which come in multiple sizes so you can match them to your hardware. Whether you have a GPU farm or not, you can definitely run something.
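As a rough sketch of what "interacting with" a local model can look like beyond the chat prompt: Ollama also runs a local HTTP server (port 11434 by default), and the Python below sends a single prompt to its `/api/generate` endpoint using only the standard library. It assumes Ollama is installed and running and that you've already pulled a model with something like `ollama pull llama3.2`. The model name and the helper names `build_request` and `ask` are my own illustrative choices, not anything defined by Ollama.

```python
import json
import urllib.request

# Ollama's local server listens on port 11434 by default.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3.2"):
    """Build a POST request for Ollama's /api/generate endpoint.

    The default model name is an assumption; use whatever you've pulled.
    """
    payload = {
        "model": model,
        "prompt": prompt,
        # Ask for one complete JSON response instead of a token stream.
        "stream": False,
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="llama3.2"):
    """Send the prompt to a running Ollama server and return the reply text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `ask("Suggest three hidden-latch mechanisms for a puzzle box.")` returns the model's reply as a string. Setting `"stream": False` trades the typewriter-style token stream for one complete JSON response, which keeps the example short.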

I can run models on my base-level M4 Mac mini. That’s crazy. That’s also not going away.

Now, is it as good as the most recent ChatGPT? No. Not at all. But, in principle, with beefier hardware I could run something that’s pretty darn close. That relieves my fear of getting “used to” working with a tool that might go away. It gets LLMs out of the rut that Notion got stuck in: the “what if this all goes away?” rut.

Now if only I could shake my dependence on Google².

Miscellaneous Thoughts

Andor

The second season of the Star Wars show “Andor” should have been called “Botheither”.

Grad School

Going back to school because you actually want to learn things is a wholly different experience from my first time through. I am actually excited to start my next homework assignment. That’s a sentence that’s never left my mouth before.

As of this writing, I’ve completed the first 2 (of 10) courses toward my Master’s in Data Analytics. So far so good.

Statistically Speaking

Humans are not good at statistical thinking. Some of that is probably inherent to the way our brains work, but I think it’s made worse by the language statisticians chose for talking about statistics. The words and phrases in use in statistics feel actively hostile to us normies.

Take hypothesis testing, for example. Hypothesis testing is where you make a guess about a population based on some sample data drawn from it, then use statistics to determine whether your guess is plausible. Nothing crazy going on so far. Well, the way that’s “supposed” to be done is by stating your guess as a pair of contrasting hypotheses:

- The “Null Hypothesis” - what you assume is true

- The “Alternative Hypothesis” - what might be true instead, which is the claim you’re using statistics to test

Okay.

Then you do your stats. I’ll skip the particulars about “degrees of freedom” and whatnot, but ultimately you decide whether your guess is likely true or not. The catch is how you’re “supposed” to state your result:

Reject the null hypothesis.

or

Fail to reject the null hypothesis.

So basically, you’re saying “it’s not not true” if it’s true, and if it’s false you say “it’s not not not true”.

Maybe I’m stupid, but that is stupid.
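To make the reject / fail-to-reject wording concrete, here’s a minimal Python sketch of a two-sided one-sample z-test. It’s a simplification: it assumes the population standard deviation is known, which sidesteps the degrees-of-freedom business a t-test would need. The function name `z_test` and the calibration-cube measurements in the usage note are made up for illustration.

```python
from statistics import NormalDist, fmean


def z_test(sample, mu0, sigma, alpha=0.05):
    """Two-sided one-sample z-test (illustrative sketch).

    Null hypothesis: the population mean equals mu0.
    Alternative hypothesis: the population mean differs from mu0.
    Assumes the population standard deviation `sigma` is known.
    """
    n = len(sample)
    standard_error = sigma / n ** 0.5
    # How many standard errors the sample mean sits from mu0.
    z = (fmean(sample) - mu0) / standard_error
    # Probability of a result at least this extreme if the null were true.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    if p_value < alpha:
        decision = "Reject the null hypothesis."
    else:
        decision = "Fail to reject the null hypothesis."
    return z, p_value, decision
```

For example, `z_test([20.05, 20.08, 19.98, 20.10, 20.04, 20.07], mu0=20.0, sigma=0.05)` checks whether six hypothetical 20 mm calibration cubes are consistent with a true average of 20 mm. Their mean is about 20.053 mm, which gives z ≈ 2.6 and a p-value under 0.05, so the function returns "Reject the null hypothesis." Notice that even then it never says the alternative is true; it only says the data would be surprising if the null were.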

Top 5: Things I’ve Used LLMs For

5. Jump-starting my learning process for brewing kombucha

4. Thinking through my note about the Subcategories of Health

3. Helping formulate my yearly theme

2. New Software How-Tos

1. Self-Contained Coding Tasks

Quote:

The best things in life are integer multiples of 42x42x7mm - Gridfinity Wiki